Response Generator Tools for Multi‑Channel Communication: How AI drafts email, chat, and social replies

AI response generators turn messy, multi-channel messaging into clear, on-brand draft content in seconds. They help marketers, sales, community, product, and operations teams collaborate, scale, and stay compliant without sacrificing voice or precision.

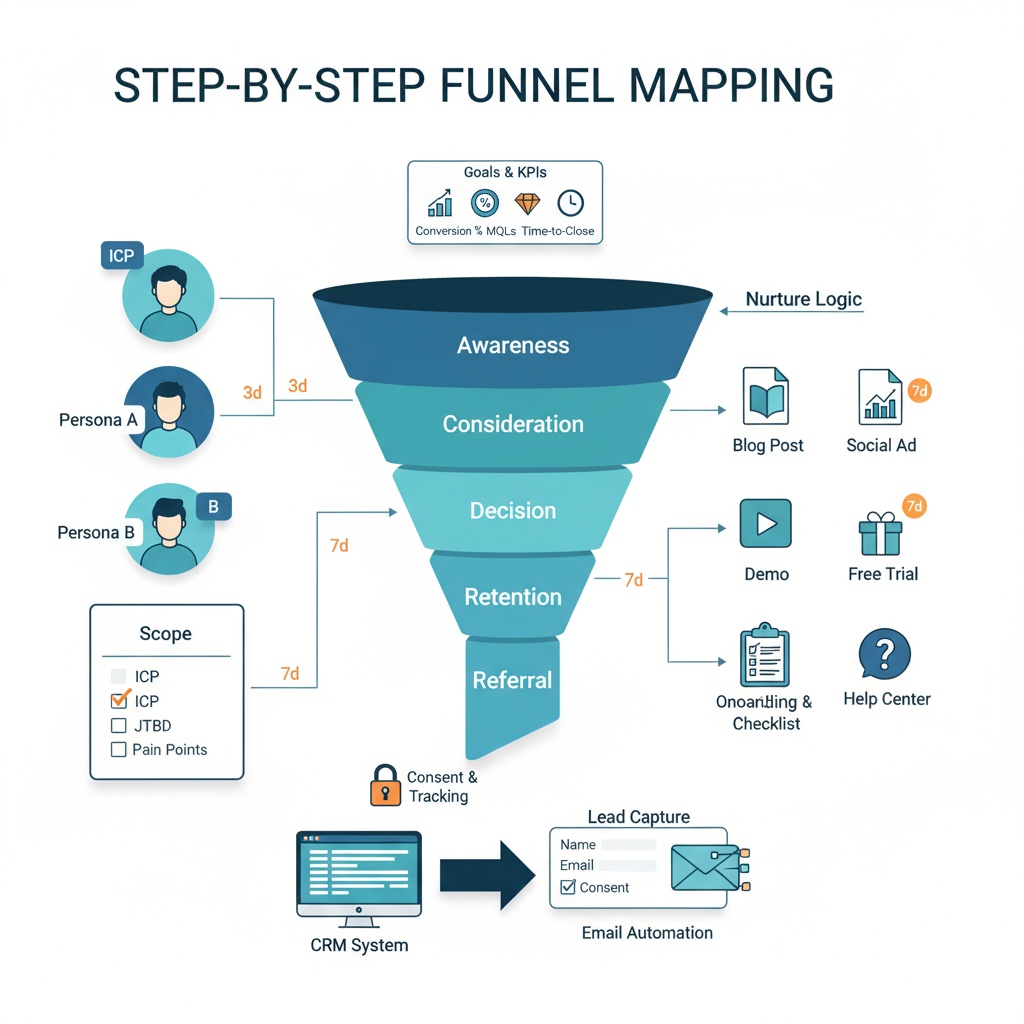

At their core, these systems analyze your prompt and context, generate a draft with a large language model (LLM) and optional retrieval-augmented generation (RAG), refine it for tone and persona, and format it for the target channel. Drafts then pass through approvals before they are published.

The result: faster, consistent replies across email, chat, social media, and internal communications.

Who benefits and why it matters

- Marketers: lifecycle campaigns, newsletters, and announcements that actually get opened

- Sales: chat prequalification, objection handling, and follow‑ups with the right tone

- Community: scalable Q&A, forum replies, and moderation-ready drafts

- Product & Ops: release notes, change logs, and meeting summaries with brand voice consistency

How a modern AI response generator works

- Prompt and context ingestion, including goals, audience, and constraints

- LLM generation with optional RAG for fresher, factual content

- Style, tone, and persona controls for brand voice consistency

- Channel-aware formatting for email, live chat, and social platforms

- Versioning, approvals, and multilingual support for governance

- Analytics for open rate, engagement, conversions, and quality signals

Email essentials: subject line generator, subject line checker, welcome email template

- Subject line generator: creates on‑brand variants for A/B/n tests and tone fit

- Subject line checker: previews length, spam risk, and mobile truncation

- Welcome email template: plugs in personalization, legal footers, and CTAs aligned to onboarding goals

- Compliance by design: CAN‑SPAM, GDPR, and consent policies baked into drafts
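A subject line checker of this kind can be approximated with a few rule-based checks. The sketch below is illustrative only: the 65-character ceiling, the 40-character mobile cutoff, and the trigger-word list are assumed values rather than standards, and `check_subject_line` is a hypothetical helper, not part of any specific product.

```python
from dataclasses import dataclass, field

# Assumed thresholds for illustration; tune them against your own inbox tests.
MAX_LENGTH = 65
MOBILE_CUTOFF = 40
SPAM_TRIGGERS = ("free!!!", "act now", "100% guaranteed")  # hypothetical list


@dataclass
class SubjectReport:
    subject: str
    length: int
    mobile_preview: str
    warnings: list[str] = field(default_factory=list)


def check_subject_line(subject: str) -> SubjectReport:
    """Run simple length, truncation, and trigger-word checks on one subject line."""
    text = subject.strip()
    report = SubjectReport(
        subject=text,
        length=len(text),
        mobile_preview=text[:MOBILE_CUTOFF],  # what a narrow inbox might show
    )
    if len(text) > MAX_LENGTH:
        report.warnings.append("too long")
    if len(text) > MOBILE_CUTOFF:
        report.warnings.append("truncated on mobile")
    lowered = text.lower()
    for trigger in SPAM_TRIGGERS:
        if trigger in lowered:
            report.warnings.append(f"spam trigger: {trigger}")
    if text.isupper():
        report.warnings.append("all caps")
    return report
```

Running every generated variant through a function like this before an A/B/n test keeps obviously risky options out of the send queue. Real spam scoring depends on far more than keywords, so treat the warnings as a first filter only.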

Chat and social superpowers

- Memory and persona control to keep context across conversations

- Tone adaptation by audience and funnel stage

- Platform-aware drafts with hashtags, mentions, and optimal length per network

- Escalation cues, citations, and safety filters for high‑trust interactions

Metrics that matter: click rate and click-through rate

- Email: open rate, click rate, click-through rate (CTR), and downstream conversions

- Chat: engagement rate, handoff rate, and lead quality

- Social: impressions, engagement rate, and link clicks

- Observability: detect tone drift, prompt decay, and performance regressions

Safe, compliant, and governed by design

- Data privacy: PII minimization, retention policies, and consent capture

- Safety: prompt‑injection defenses, disallowed topics, and toxicity checks

- Human‑in‑the‑loop: approvals for high‑risk or regulated content

- Bias and fairness: inclusive language and demographic neutrality

From structured prompts to rigorous evaluation and responsible deployment, this guide demystifies the tools, patterns, and practices that make AI response generators reliable at scale. Let’s map the channels, features, templates, and experiments you’ll need to pilot confidently and grow with clear governance and analytics.

What an AI response generator is and how it actually works

A response generator is an AI system that composes human-like replies from prompts, then shapes those replies for the specific channel where they will be delivered. It turns context into clear, on-brand content for email, chat, social, and internal updates. Teams use it to draft faster, route answers reliably, and keep voice consistent across every touchpoint.

Under the hood, this tool follows a predictable pipeline. Knowing each stage helps you choose the right workflows to automate, design safe review loops, and avoid quality drift.

The core pipeline of a response generator

Prompt and context intake

- Users supply a query, a short brief, or a longer context package. Structured context, such as audience, brand rules, and examples, improves results.

Large language model generation

- An LLM interprets the intent and produces a first draft. Strong models balance fluency with adherence to constraints.

Retrieval-augmented generation

- For accuracy, the system can pull facts from knowledge bases, CRMs, or policy docs. This reduces hallucinations in product specs, policies, and pricing.

Style and tone post-processing

- The draft is adapted to brand voice, persona, and readability. This can include lexical constraints, sentence length, and jargon filters.

Channel-aware formatting

- The reply is packaged for the destination, such as email headers, chat short-form, or social character limits. Elements like preheaders or hashtags are handled here.

Review and approval

- Human reviewers confirm correctness, safety, and compliance for higher risk content. Approvals can be role-based by channel or campaign.

Publish and archive

- The final response is sent, logged, and linked to analytics. Storing prompts and outputs enables later audits and iteration.

This pipeline is the same whether you are drafting a product update newsletter, triaging a sales chat, or preparing a social post sequence. The differences appear in the inputs, the formatting rules, and the approval rigor.
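To make the stages concrete, here is a minimal sketch of the pipeline as a chain of functions. Everything in it is a placeholder: `generate` stands in for a model call, `retrieve` attaches a made-up source identifier, the per-channel length limits are assumed values, and `review` replaces a human approval step. Retrieval runs before generation here so that a real generator could use the retrieved facts.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Draft:
    prompt: str
    channel: str
    text: str = ""
    sources: list[str] = field(default_factory=list)
    approved: bool = False
    log: list[str] = field(default_factory=list)


Stage = Callable[[Draft], Draft]


def retrieve(draft: Draft) -> Draft:
    # Placeholder for RAG; attaches a hypothetical source identifier.
    draft.sources.append("kb://pricing-policy")
    return draft


def generate(draft: Draft) -> Draft:
    # Placeholder for the LLM call; a real system would call a model here.
    draft.text = f"Draft reply for: {draft.prompt}"
    return draft


def apply_style(draft: Draft) -> Draft:
    # Stand-in for tone and persona post-processing.
    draft.text = draft.text.strip()
    return draft


def format_for_channel(draft: Draft) -> Draft:
    # Assumed per-channel length limits, for illustration only.
    limits = {"chat": 300, "social": 280}
    limit = limits.get(draft.channel)
    if limit is not None:
        draft.text = draft.text[:limit]
    return draft


def review(draft: Draft) -> Draft:
    # Stand-in for human approval; auto-approves only internal updates here.
    draft.approved = draft.channel == "internal"
    return draft


PIPELINE: list[Stage] = [retrieve, generate, apply_style, format_for_channel, review]


def run_pipeline(draft: Draft) -> Draft:
    for stage in PIPELINE:
        draft = stage(draft)
        draft.log.append(stage.__name__)  # archive trail for later audits
    return draft
```

Keeping each stage as a separate function makes it straightforward to swap formatting rules or tighten the review step per channel without touching the rest of the chain.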

Governance, privacy, and guardrails that protect the pipeline

A production-grade response generator accounts for privacy and security from the start. Inputs often include sensitive context like names, email addresses, ticket histories, or contract terms. That means you need:

Data privacy scaffolding

- Consent capture, PII minimization, access controls, and retention windows that match your policies.

Safety filters and topic boundaries

- Injection defenses, restricted topics, and brand-specific no-go lists.

Compliance-aware delivery

- Email rules like CAN-SPAM and GDPR, plus channel-specific platform terms.

Bias and accessibility checks

- Inclusive language review, reading grade targets, and clarity checks for different audiences.
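As one small example of PII minimization, the sketch below masks email addresses and phone-like numbers before text is passed to a model. The two regular expressions are deliberately simple assumptions and will miss many formats; `redact_pii` is a hypothetical helper, and production systems usually rely on dedicated detection tooling.

```python
import re

# Deliberately simple patterns; real PII detection needs broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text
```

Placeholders such as `[EMAIL]` keep the sentence readable for the model while the original values stay inside your environment.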

For readers who want a broader tour of modern AI tool stacks, a concise starting point is this beginner’s guide to AI tools. If you are comparing the landscape of platforms, it helps to browse the best AI tools and a roundup of top AI response generators to understand feature baselines.

Channels beyond support: where a response generator delivers value

A response generator is not just for ticket deflection. It helps every outward-facing channel move faster without losing quality. The goal is consistent, useful communication that respects each channel’s norms.

Email workflows your team can accelerate

Email remains a high-leverage channel because it mixes long-form storytelling with measurable outcomes. A response generator can assemble content blocks, align voice with reader intent, and localize without reinventing the wheel.

Newsletters and announcements

- Draft concise updates with consistent intros and scannable sections. Include alt text and linked CTAs for accessibility and tracking.

Lifecycle flows

- Build a welcome email template that personalizes benefits based on segment. Expand into onboarding, reactivation, and win-back without duplicating logic.

Sales and success communications

- Create polite nudges, meeting follow-ups, and expansion offers that map to deal stage and account health.

Teams often ask about the difference between click rate and click-through rate. In this guide, click rate is unique clicks divided by delivered messages. Click-through rate is unique clicks divided by unique opens, which isolates engagement among those who opened the email. If you are setting up a measurement plan for email, see our primer on newsletter and email marketing for sender reputation, CTR nuances, and subject line testing.

Part 2 examines how features like a subject line generator and subject line checker contribute to better open signals while preserving compliance and preview fit.

Relevant deep dives:

- Using an AI email response generator for support and sales: setup and tone controls in our email response generator guide

- Designing a helpdesk that integrates with email and CRM in email for customer support

Chat and messaging touchpoints that qualify and educate

Short-form, real-time channels reward precision. A response generator can use memory and context to step prospects through qualifying questions, or to guide attendees through event FAQs without queuing agents.

Sales prequalification

- Ask intent and fit questions, summarize needs, and offer the right next step. Hand off seamlessly to a rep when confidence drops.

Community Q&A

- Provide citations for policy answers and link to canonical docs. Use escalation keywords to trigger human review.

Event support

- Cover registration, logistics, and schedule updates. Route edge cases to an event coordinator.
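The handoff and escalation behavior described above can be sketched as a small routing rule. The confidence floor, the keyword list, and the `model_confidence` field are all assumptions for illustration; how a confidence score is produced depends on your generator.

```python
from dataclasses import dataclass

# Assumed values for illustration; set them from your own review data.
CONFIDENCE_FLOOR = 0.6
ESCALATION_KEYWORDS = ("refund", "legal", "cancel my account")


@dataclass
class ChatTurn:
    message: str
    model_confidence: float  # hypothetical score supplied by your generator


def route(turn: ChatTurn) -> str:
    """Return 'human' when a turn should be handed off, else 'bot'."""
    lowered = turn.message.lower()
    if any(keyword in lowered for keyword in ESCALATION_KEYWORDS):
        return "human"
    if turn.model_confidence < CONFIDENCE_FLOOR:
        return "human"
    return "bot"
```

Checking keywords before confidence means a sensitive request always reaches a person, even when the model is confident in its draft.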

If you are exploring vendors for these tasks, comparison articles like AI email response generators help you evaluate workflow depth, routing logic, and safety controls across tools.

Social media orchestration that respects each platform

Social channels require tight formatting and a tempo that matches platform culture. A response generator can create multi-variant drafts, align hashtags, and coordinate replies without duplicating effort.

Campaign scaffolding

- Provide long-form context once, then spawn channel-specific variants. Map primary CTA to the platform most likely to convert.

Community replies

- Triage mentions, draft polite responses, and flag sensitive posts. Keep tone consistent even when threads escalate.

Scheduling and pacing

- Balance promotional posts with educational or community content. Reuse pillars while avoiding repetition fatigue.

For a broader tools view that includes video and multimodal options relevant to social teams, you can review this curated list of the best AI tools.

Internal communications that keep teams aligned

Internal updates have different stakes, but the quality bar is high. A response generator helps document changes with clarity and context, then distributes those updates where people actually read them.

Release notes and change logs

- Summarize commits into user-facing updates and internal diffs. Tag components, affected user segments, and rollback notes.

Meeting summaries

- Convert transcripts into action items with owners and due dates. Reduce time spent consolidating decisions across time zones.

Operational updates

- Produce clear service notices and escalation paths. Keep archive links standardized for audits.

Channel comparison at a glance

| Channel | Typical purpose | Example outputs | Approval rigor | Latency expectations | Primary engagement signal |
|---|---|---|---|---|---|
| Email | Nurture, announce, convert | Newsletter, onboarding sequence, product launch | Medium to high for external sends | Hours to days | Open and click rate |
| Chat/Messaging | Qualify, support, guide | Sales prequalification, FAQs, in-app tips | Medium with auto-handoff | Seconds | Session engagement |
| Social | Reach, converse, amplify | Post variants, comment replies, campaign threads | Medium for branded posts | Minutes to hours | Impressions and interactions |
| Internal | Align, document, decide | Release notes, change logs, summaries | Low to medium by policy | Minutes to hours | Read receipts and acknowledgments |

If your marketing funnel relies on both organic and paid acquisition, aligning these channels with landing page intent is essential. Our guide on search engine optimization vs pay per click outlines cost and timeline tradeoffs that influence your channel mix and how you staff response generation.

Implementation roadmap for a pragmatic response generator pilot

You do not need to automate everything at once. A narrow pilot yields better signal and informs your governance model.

Pick one flow per channel

- For email, choose a single lifecycle moment such as the welcome email template. For chat, select a crisp prequalification path. For social, prototype a campaign with two platforms.

Define success up front

- Identify the behavioral outcomes you want. For email, consider improvements in open signals and click rate. For chat, track engagement and handoff quality. Keep instrumentation lightweight at first.

Build a prompt and style system

- Document brand voice, tone ladders, and do-not-say lists. Provide approved snippets and examples to stabilize outputs.

Stand up approvals and logging

- Create review tiers by risk level. Store prompts, sources, and final outputs so QA can trace decisions.

Integrate with your stack

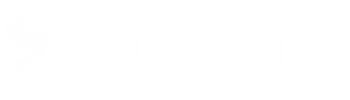

- Connect CRM and analytics, then map outputs to your sales funnel templates to preserve attribution and stage progression.

Pilot results will highlight friction points such as missing context, unclear tone rules, or overly manual approvals. Iterating on these basics prevents scale from magnifying small errors.

Pitfalls to avoid when scaling your response generator

Teams often run into predictable traps. Address these early so the pipeline stays reliable.

Overfitting to one channel

- If you tune the system solely for support tickets, it will underperform for campaigns. Rotate test cases across email, chat, social, and internal content.

Compliance drift

- Add automated checks for risky phrases and policy violations. Maintain a changelog of rules so reviewers know what changed and why.

Vague prompting and weak context

- Provide role, audience, constraints, and examples every time. Keep a living prompt library to reduce variance.

Misreading click signals

- Click rate and click-through rate are not interchangeable. Click rate is unique clicks divided by delivered messages. CTR is unique clicks divided by unique opens, which better reflects message relevance for those who opened it.

For a structured overview of the broader market and emerging patterns, articles such as the ultimate guide to automated messages and a survey of top AI response generators provide useful perspective. If review replies are part of your strategy, a focused review response generator resource can help calibrate tone across marketplaces.

From foundations to hands-on: what Part 2 will unpack next

With the pipeline and channels mapped, the next step is mastering the levers that make a response generator predictable in production.

In Part 2, we will dig into the specific features teams rely on for email, chat, and social, including how a subject line generator and a subject line checker influence performance at the top of the funnel.

We will also walk through a universal prompt template structure that keeps outputs on-brand, then show how to evaluate results in-market using open signals, CTR, and engagement experiments, with practical dashboards and governance patterns you can apply immediately.

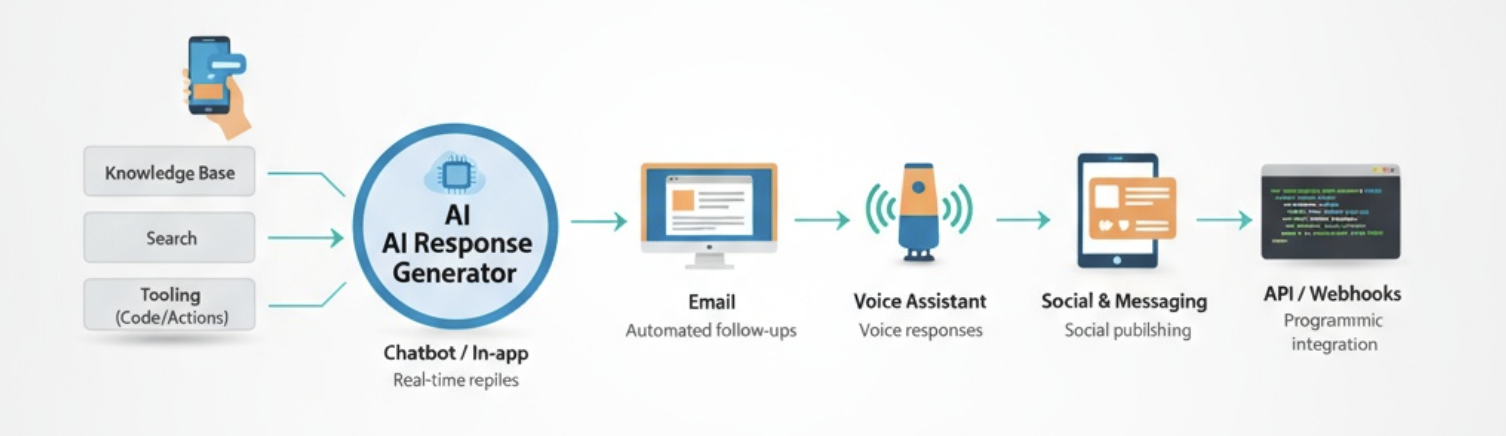

From Pilot to Program: Operationalizing a Response Generator Across the Org

Shifting from a small proof of concept to a durable, multi-team program means elevating standards, instrumentation, and governance. The focus moves from “can it write?” to “can it consistently produce the right message, in the right place, under real constraints, with observable impact?”

The following sections detail how to harden your approach, evaluate tools beyond surface features, and design measurable experiments that stand up to scrutiny.

A deeper feature scorecard that predicts real-world success

Surface-level checklists miss the operational realities that break at scale. Build a weighted scorecard that captures the following dimensions:

- Determinism controls: temperature, top-p, and prompt pinning that stabilize outputs across runs, especially for regulated content and sales cadences where variance can derail messaging.

- Prompt lifecycle management: versioning, rollback, approval routing, and a changelog tied to performance deltas so you can correlate creative changes to shifts in open rate and downstream conversions.

- Compliance by construction: role-based access, policy-aware templates, auditable logs, and automated redaction of PII before prompts leave your environment. For finance or health, require inbound classification that blocks disallowed topics before generation.

- Deliverability lab for email: a subject line generator that pairs with a subject line checker capable of spam trigger audits, preview text fit, mobile inbox rendering, and seed-list inbox placement checks. Combine this with a pre-send throttle to avoid domain reputation spikes during experiments.

- Retrieval orchestration: documented connectors, vector refresh policies, and query filters that prevent outdated details from contaminating responses. Pair with transparent citations for chat and social replies when authoritative grounding matters.

- Latency and throughput: service-level targets and queueing strategies that maintain response timeliness in chat and during social surges, with fallbacks to cached snippets if the model is slow.

- Safety and prompt-injection resilience: red-team tests, jailbreak detection, and response linting to enforce brand glossary and tone boundaries.

Score each area by impact and difficulty. This prevents overvaluing flashy UI features over the operational capabilities that keep quality stable as volume grows.
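A weighted scorecard reduces to a weighted sum once the weights are agreed. The sketch below assumes hypothetical weights that sum to 1.0 and vendor scores on a 1 to 5 scale; the dimension names mirror the list above, but the numbers are placeholders your team would set.

```python
# Hypothetical weights (must sum to 1.0); vendor scores are on a 1-5 scale.
WEIGHTS = {
    "determinism": 0.15,
    "prompt_lifecycle": 0.15,
    "compliance": 0.20,
    "deliverability": 0.15,
    "retrieval": 0.15,
    "latency": 0.10,
    "safety": 0.10,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine per-dimension scores into one number using the given weights."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    return sum(weights[name] * scores[name] for name in weights)
```

Refusing to score a vendor with missing dimensions is a deliberate choice: it stops a tool from ranking well simply because nobody evaluated its weak areas.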

Turning a universal prompt template into a durable system

A universal prompt template only adds value when it is modular, data-aware, and enforceable across channels. Treat it as a schema:

- Role and goal are fixed components. They set intent and accountability for the generator.

- Audience, channel, and constraints are parameterized. They source from CRM, preference centers, and legal policies.

- Examples and data are dynamic. They reference a library of approved samples, live inventory or release notes, and recent customer events.

- Evaluation criteria are executable. They tie to automated checks that grade outputs for tone, safety, lexicon compliance, and factual grounding.

Apply this to a welcome email template without repeating boilerplate. For a two-touch onboarding sequence, specify:

- Touch 1 objective: attention capture and primary CTA micro-commit.

- Touch 2 objective: activation milestone and preference collection.

- Inputs: last referrer, product tier, device type, geography.

- Constraints: 50–65 character subject line, unique preheader, CAN-SPAM compliant footer, accessible HTML, voice rules from the brand guide.

- Guardrails: blocked claims list, negative keyword filter, and a data freshness threshold for referenced features.
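One way to treat the template as a schema is a small data class with fixed, parameterized, and dynamic fields. This is a sketch under stated assumptions: the `PromptTemplate` class, its field names, and the sample values (tier, geography, check names) are hypothetical and only mirror the structure described above.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PromptTemplate:
    role: str                                   # fixed
    goal: str                                   # fixed
    audience: str                               # parameterized, e.g. from CRM
    channel: str                                # parameterized
    constraints: tuple[str, ...]                # parameterized, e.g. from legal policy
    examples: tuple[str, ...] = ()              # dynamic, from an approved library
    data: dict[str, str] = field(default_factory=dict)  # dynamic inputs
    evaluation: tuple[str, ...] = ()            # names of automated checks

    def render(self) -> str:
        """Serialize the template into a plain-text prompt."""
        lines = [
            f"Role: {self.role}",
            f"Goal: {self.goal}",
            f"Audience: {self.audience}",
            f"Channel: {self.channel}",
            "Constraints:",
            *[f"- {c}" for c in self.constraints],
        ]
        if self.examples:
            lines += ["Examples:", *[f"- {e}" for e in self.examples]]
        if self.data:
            lines += ["Data:", *[f"- {k}: {v}" for k, v in self.data.items()]]
        if self.evaluation:
            lines += ["Evaluation criteria:", *[f"- {e}" for e in self.evaluation]]
        return "\n".join(lines)


# Touch 1 of the two-touch onboarding sequence, with placeholder inputs.
touch_1 = PromptTemplate(
    role="Onboarding copywriter",
    goal="Capture attention and earn one micro-commitment",
    audience="New trial users",
    channel="email",
    constraints=(
        "Subject line of 50-65 characters",
        "Unique preheader",
        "CAN-SPAM compliant footer",
    ),
    data={"product_tier": "starter", "geography": "DE"},
    evaluation=("tone", "lexicon", "grounding"),
)
```

Because the fields are typed and the object is frozen, a second touch can be created as a new instance with a different goal and inputs while the role and constraints stay identical.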

For teams that want a deeper breakdown of email orchestration, link the template system to your CRM and ESP architecture using the guidance in newsletter and email marketing strategy and AI email response generator for support and sales.

Measurement architecture that goes beyond vanity indicators

Define metrics with precision so teams interpret results the same way:

- Open rate: unique opens divided by delivered messages. Where Apple Mail Privacy Protection (MPP) inflates recorded opens, adjust by using proxy engagement events.

- Click rate: unique clicks divided by delivered messages.

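- Click-through rate (CTR): unique clicks divided by unique opens. Some email platforms report this opens-based ratio as click-to-open rate, so confirm which denominator your dashboard uses.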

- Conversion rate: conversions divided by delivered messages or sessions, depending on the analysis layer.

To compare click rate and click through rate without confusion, lock definitions in your experimentation wiki and annotate dashboards. For chat, track engagement rate, handoff rate, and lead quality via CRM-opportunity linkage. For social, prioritize per-post save rate and profile click depth when top-of-funnel reach is inflated by algorithmic boosts.
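Locking the definitions is easier when they live in code that every dashboard imports. The sketch below encodes the email metrics exactly as defined in this section; the `EmailStats` container and function names are hypothetical, and no Apple MPP adjustment is attempted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EmailStats:
    delivered: int
    unique_opens: int
    unique_clicks: int
    conversions: int


def _ratio(numerator: int, denominator: int) -> float:
    # Return 0.0 rather than raising when a send has no denominator events.
    return numerator / denominator if denominator else 0.0


def open_rate(s: EmailStats) -> float:
    return _ratio(s.unique_opens, s.delivered)


def click_rate(s: EmailStats) -> float:
    return _ratio(s.unique_clicks, s.delivered)


def click_through_rate(s: EmailStats) -> float:
    return _ratio(s.unique_clicks, s.unique_opens)


def conversion_rate(s: EmailStats) -> float:
    # Uses delivered messages as the denominator; swap in sessions if needed.
    return _ratio(s.conversions, s.delivered)
```

For a send with 1,000 delivered messages, 250 unique opens, and 50 unique clicks, click rate is 50 / 1,000 = 5% while click-through rate is 50 / 250 = 20%, which is why the two figures should never share an axis without a label.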

Instrument events end to end:

- Use consistent user and campaign IDs across ESP, web analytics, and CRM.

- Store prompt version IDs with each send and reply.

- Log automated quality scores for every message so you can segment performance by the underlying prompt and safety filters.

When testing, use sequential A/B/n with traffic shaping to avoid audience fatigue. For large catalogs or fast-moving feeds, consider a contextual multi-armed bandit that adapts allocation while holding out a stable control. Stop tests based on pre-registered power calculations, not a noisy midstream spike.
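A pre-registered power calculation can be as simple as the standard two-proportion sample-size approximation. The sketch below assumes a fixed-horizon, two-sided test comparing one variant with a control; it does not cover sequential designs or bandits, which need their own stopping rules. The function name and defaults are illustrative.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(
    p_control: float,
    p_variant: float,
    alpha: float = 0.05,
    power: float = 0.80,
) -> int:
    """Approximate recipients needed per arm to detect p_control vs p_variant."""
    if not (0 < p_control < 1 and 0 < p_variant < 1):
        raise ValueError("rates must be between 0 and 1")
    if p_control == p_variant:
        raise ValueError("rates must differ to size a test")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    effect = p_variant - p_control
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)
```

With the defaults, detecting a lift from a 20% to a 25% open rate needs roughly eleven hundred recipients per variant, and smaller lifts need far more. Writing that number down before the send is what makes it possible to ignore a midstream spike.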

Real-world examples show the value of disciplined measurement. An ecommerce brand using a review response generator increased repeat purchase rate by pairing sentiment-aware replies with time-limited incentives, modeled on patterns discussed in review response generator for ecommerce and the practical guide on how to use AI review responses.

Meanwhile, a B2B team tuned LinkedIn reply prompts with citations to product docs and saw a lower handoff rate but a higher qualified pipeline per conversation because sales entered fewer dead-end threads.

Channel-specific playbooks that respect domain constraints

- B2B SaaS: Inbound chat on pricing pages should pre-qualify with 3 intent probes and one friction-reducing offer to book time. The response generator must maintain memory across the conversation and surface the exact discount policy without leaking internal thresholds. Connect this to sales funnel templates to align capture, nurture, and demo stages.

- Healthcare: Email content must avoid diagnostic language and defer to approved care instructions. The subject line checker should flag clinical terms and route to clinician review. Use short-lived tokens for protected links and never include appointment details in subject lines.

- Fintech: Social replies should cite rate sources and link to a knowledge base entry with timestamped data. Add an escalation instruction when users ask for personal account actions to keep transactions off-platform.

- Hospitality: Welcome sequences benefit from geotargeted recommendations and weather-aware preheaders. Pair a subject line generator with itinerary snippets pulled at send time for relevance without bloating the message body.

Counterarguments, constraints, and how to engineer around them

Critics warn about brand dilution and tone drift when teams over-automate.

Solve this with a two-tier system: lightweight automation for low-risk interactions and human-in-the-loop reviews for high-impact moments like regulatory announcements or sensitive community threads. Maintain a brand lexicon that lists must-use phrases, blocked terms, competitive references, and reading-level targets. Enforce it with automated linting before human review.
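Automated linting against a brand lexicon can start with plain string checks. In the sketch below, the `Lexicon` fields, the 25-word sentence cap (a crude stand-in for a reading-level target), and the sample phrases are all assumptions for illustration.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Lexicon:
    must_use: tuple[str, ...]
    blocked: tuple[str, ...]
    max_words_per_sentence: int = 25  # assumed proxy for reading level


def lint(text: str, lexicon: Lexicon) -> list[str]:
    """Return a list of lexicon violations found in a draft."""
    issues: list[str] = []
    lowered = text.lower()
    for phrase in lexicon.must_use:
        if phrase.lower() not in lowered:
            issues.append(f"missing required phrase: {phrase}")
    for term in lexicon.blocked:
        # Word boundaries avoid flagging a term inside a longer word.
        if re.search(rf"\b{re.escape(term.lower())}\b", lowered):
            issues.append(f"blocked term: {term}")
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        if len(sentence.split()) > lexicon.max_words_per_sentence:
            issues.append("sentence too long")
    return issues
```

An empty list means the draft can move on to human review; anything else goes back to the generator or the author with the specific violations attached.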

Tight prompts and retrieval make hallucinations less frequent, but the risk is never zero. Add a citation requirement for claims, a disallowed content filter, and a fallback to approved snippets when confidence scores drop. Circuit breakers should pause a campaign if spam complaints or bounce rates exceed thresholds that threaten sender reputation, then force a review of the subject line checker rules and contact hygiene.
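A campaign circuit breaker of this kind can be sketched as a threshold check over a recent send window. The complaint and bounce thresholds and the minimum sample below are assumed values, not provider limits, and `SendWindow` is a hypothetical container; align the numbers with your email provider's guidance.

```python
from dataclasses import dataclass

# Assumed thresholds for illustration; align them with your ESP's guidance.
MAX_COMPLAINT_RATE = 0.001
MAX_BOUNCE_RATE = 0.02
MIN_SAMPLE = 500


@dataclass
class SendWindow:
    delivered: int
    complaints: int
    bounces: int


def should_pause(window: SendWindow) -> tuple[bool, str]:
    """Decide whether to pause a campaign based on one send window."""
    attempted = window.delivered + window.bounces
    if attempted < MIN_SAMPLE:
        return False, "not enough volume to judge"
    complaint_rate = window.complaints / window.delivered if window.delivered else 0.0
    bounce_rate = window.bounces / attempted
    if complaint_rate > MAX_COMPLAINT_RATE:
        return True, "spam complaint rate above threshold"
    if bounce_rate > MAX_BOUNCE_RATE:
        return True, "bounce rate above threshold"
    return False, "healthy"
```

The minimum sample guards against pausing a campaign because of two complaints in the first fifty sends, while the returned reason gives reviewers a starting point for the follow-up audit.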

Implementation blueprint that unblocks scale

Organize delivery around a cross-functional pod:

- Product owner defines roadmap and success metrics.

- Content lead owns prompts, lexicon, and editorial QA.

- Data analyst builds dashboards and runs tests.

- Marketing ops integrates ESP, CRM, and web tagging.

- Security and legal set policies and conduct audits.

- Channel managers own day-to-day execution and feedback loops.

Work in two-week sprints with a standing backlog of prompt improvements tied to performance findings. Structure your prompt library by channel and lifecycle stage, then store variants with semantic tags and outcome metrics.

Establish a prompt registry with owners, review cadences, and retirement dates for stale assets. For support and operations use cases, incorporate shared inbox and helpdesk setup to route context into prompts and preserve SLA integrity.

Tooling landscape with pragmatic selection criteria

A crowded market makes it tough to compare platforms. Shortlist candidates using curated reviews of top AI response generators and side-by-side evaluations in best AI tools 2025. For email-heavy programs, explore AI email response generators with strong deliverability features.

If your priority is cross-channel content, examine an AI response generator guide that emphasizes governance. Beginners can triangulate fundamentals with an approachable AI tools explained video to align non-technical stakeholders.

For deeper practice materials, align your channel strategy with newsletter and email marketing strategy, refine your outreach cadences using sales funnel templates, and extend your service workflows with email for customer support.

Advanced experimentation ideas to unlock compounding gains

- Subject line ideation at scale: Spin up a weekly slate of contenders from your subject line generator, but gate them through a tone-distance check from last week’s winner to avoid convergence that bores the audience. The subject line checker then screens for previews that complement the hook without duplicating it.

- Behavioral branching in onboarding: Use a welcome email template that forks based on first-session depth, device, and timezone. For low-engagement branches, reduce cognitive load with a single CTA and a progress indicator. For high-engagement branches, add a power-user tip and a calendarized webinar invite.

- Cross-channel lift tests: Pair a social reply campaign with an email reactivation nudge, then measure blended lift in opens and site sessions. Attribute assists by tying reply IDs to ESP journeys.

The next stage introduces production-grade observability, multi-market localization, and a governance rhythm that keeps creative fresh while reducing risk.

Conclusion

Multi-channel communication succeeds when you align the tool with clear objectives, structure your prompts, and enforce approvals. That discipline supports a consistent voice, faster production, and measurable lift across email, chat, social, and internal updates.

Email execution sets the tone for everything else. Generate strong options with a subject line generator, validate deliverability and preview fit with a subject line checker, and launch sequences from a refined welcome email template library. Pair these with compliance controls and you secure inbox placement, clarity, and trust.

Measure what matters and act on it. Track open rate, click rate and click‑through rate alongside conversions, run focused A/B/n tests, watch for tone drift, and segment results by audience. This turns content into a controllable growth lever instead of guesswork.

Governance is non‑negotiable. Protect data, neutralize risky prompts, uphold platform rules, and keep a human in the loop for sensitive outputs. With these guardrails, scale does not erode quality or safety.

Select the response generator that fits your channels and goals, launch a tight pilot, define success with hard metrics, and expand through a maintained prompt library and brand style system. Start now, prove the uplift fast, and compound impact as you roll the framework across every customer touchpoint.

Frequently Asked Questions

An AI response generator creates on-brand drafts for multiple channels—email, chat, social, and internal notes—using structured prompts, style controls, and an optional approval workflow. A traditional chatbot focuses on real-time back-and-forth dialogue, often in a single channel. Response generators emphasize multi-channel formatting and human-in-the-loop review, with analytics and governance baked in, making them better suited for campaigns, lifecycle messages, and reusable templates.

Marketing, sales, and community teams usually see quick gains by automating repetitive drafts like newsletters, outreach snippets, and event FAQs. Start a pilot with one email flow (e.g., onboarding), one chat scenario (lead prequalification), and one social campaign (launch posts). Define success metrics up front (open rate, CTR, and conversions), assign one content owner plus a reviewer, and expect useful results within 2–4 weeks as you build a reusable prompt library.

Use a structured prompt template: Role + Goal + Audience + Channel + Constraints + Examples + Data + Evaluation Criteria. Include your brand voice rules, glossary, and disallowed phrases to anchor style/tone controls. Provide short, high-quality examples and specify the persona (e.g., “helpful, concise, B2B marketer”) to reduce drift. For repeatable tasks, turn prompts into templates with variables (product, audience segment, CTA) and require brief reviewer notes for continuous improvement.

Generate 10–15 options with a subject line generator across tones (curious, urgent, benefit-led), then validate each with a subject line checker for length, preview text fit, and spam risk. Pair subject lines with strong preview text, avoid overused clickbait, and test 2–3 variants per send. Aim for enough volume to detect a real open rate difference (e.g., several hundred opens per variant), rotate winning angles, and refresh monthly to prevent fatigue.

Email click rate is unique clicks divided by delivered emails; click-through rate (CTR) is unique clicks divided by unique opens. Click rate indicates overall list-level performance, while CTR isolates message effectiveness among those who opened. Optimize both: test subject lines to lift opens (affects click rate) and refine copy/CTAs to lift CTR. Track conversions to tie improvements to revenue, and segment results by audience for cleaner insights.

Use RAG (retrieval-augmented generation) when accuracy matters—product specs, policies, pricing, or regulated content. Connect only vetted sources (knowledge base, release notes), enable retrieval filters by recency and permission, and log citations for reviewers. Protect user data with PII minimization, anonymization, and strict retention policies. For speed, cache frequent answers and set automated fallbacks (e.g., escalate to human) when confidence is low.

Add automated checks for CAN-SPAM (clear sender, physical address, and easy unsubscribe) and GDPR (legal basis, consent tracking, data minimization). Bake compliance clauses into templates, restrict use of sensitive attributes, and require human-in-the-loop approval for high-risk topics. Maintain audit logs, define retention windows, and include safety filters against disallowed topics or prompt injection. Run inclusive language checks to reduce bias.

Use A/B/n testing with one variable at a time (subject line, CTA, or tone), and apply sequential testing to reduce audience fatigue. In email, run tests for a full send cycle or until you reach the sample size you planned in advance (often several hundred opens or clicks per variant at minimum), rather than stopping at the first promising spike. In chat, compare engagement and qualified lead rates; in social, measure impressions and engagement by platform. Maintain observability dashboards to track tone drift and prompt performance over time.

Common causes are vague prompts, missing context, or tone drift over time. Tighten your style/tone controls, provide short examples of “good vs. bad” copy, and set a clear persona per use case. For accuracy, enable retrieval from approved sources, include constraints (e.g., “don’t infer pricing”), and lower temperature for factual content. Add a final compliance pass and route low-confidence responses to human review.

Yes—have it produce a welcome email template with a value-led headline, a single focused CTA, and lightweight onboarding steps (e.g., “Set up profile,” “Join community”). Personalize with name and plan, restate the core benefit, and include a compliant footer. Use a subject line generator to test curiosity vs benefit angles, then refine with a subject line checker. Measure open rate, CTR, and day-7 activation to confirm impact.